Float Like an IP, Sting Like a Bee: Azure’s Floating IP Feature and Network Security Groups

Azure Load Balancers have a feature called Floating IP that allows different services on the same target host to use the same network port. Azure Kubernetes Service (AKS) uses this feature automatically in certain configurations when exposing services outside of the cluster, which introduces an interesting network configuration that is relevant to your exposure analysis.

Azure Load Balancing Basics

Azure offers various load balancing options, ranging from CDN-like features to standard network-level load balancing. Azure Application Gateway (focused on HTTP load balancing) and Azure Load Balancer (Layer 4 load balancing) offer functionality comparable to the load balancing offerings of other cloud providers.

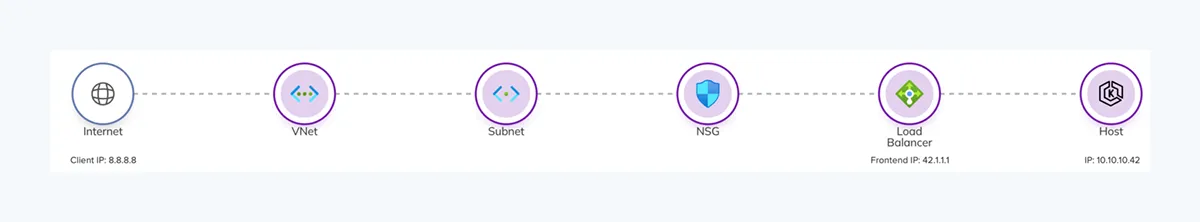

A typical Load Balancer setup includes a VNet/subnet, a Network Security Group (NSG), the Load Balancer, and a target host or host group, as illustrated below:

Load Balancers can forward connections based on Load Balancing Rules or Inbound NAT Rules. Both options apply destination NAT to the forwarded packets; the other differences between their feature sets are not relevant for this blog post.

If we look at the network packets arriving at the target host, the source IP address will be 8.8.8.8 while the destination IP will be the private IP of the host, 10.10.10.42.
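The destination NAT step can be sketched as a small Python model. It is a simplification under stated assumptions: the frontend IP 42.1.1.1 is the address used later in this post, port 443 is hypothetical, and the backend port is assumed to equal the frontend port. The `Packet` type and `dnat` function are illustrative names, not an Azure API.

```python
from dataclasses import dataclass, replace
from ipaddress import IPv4Address


@dataclass(frozen=True)
class Packet:
    src: IPv4Address
    dst: IPv4Address
    dport: int  # hypothetical service port, left unchanged by this sketch


def dnat(packet: Packet, backend_ip: IPv4Address) -> Packet:
    """Rewrite only the destination address to the backend's private IP.

    The source address is left untouched, which is why the target host
    still sees the client's public IP (8.8.8.8).
    """
    return replace(packet, dst=backend_ip)


incoming = Packet(src=IPv4Address("8.8.8.8"), dst=IPv4Address("42.1.1.1"), dport=443)
delivered = dnat(incoming, IPv4Address("10.10.10.42"))
print(delivered.src, delivered.dst)  # 8.8.8.8 10.10.10.42
```

Because `Packet` is frozen, `dnat` returns a new packet and the original stays intact, which keeps the "before" and "after" views of the same connection easy to compare.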

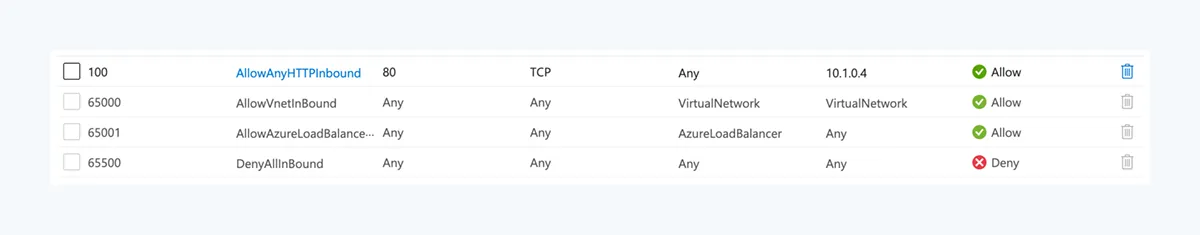

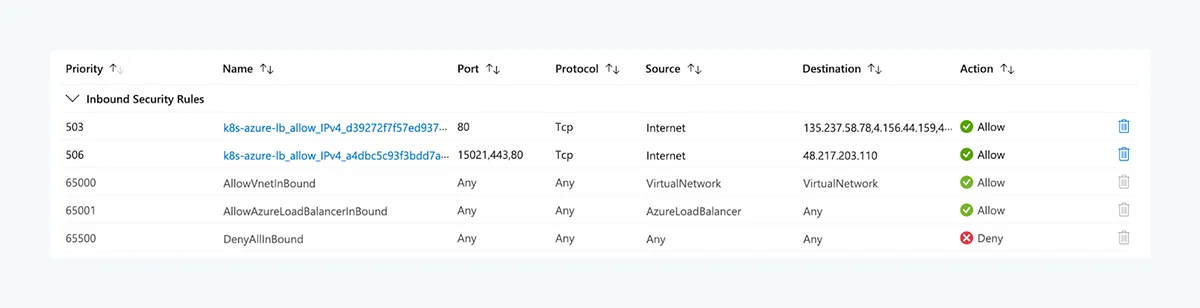

With an NSG like the one below, we are all set to receive traffic on our load-balanced target host(s):

Enter Floating IP

Both Load Balancing and Inbound NAT rules have the option to enable Floating IP for the specific rule:

The help text does not immediately make clear what Floating IP is:

Without Floating IP, Azure exposes a traditional load balancing IP address mapping scheme for ease of use (the VM instances’ IP). Enabling Floating IP changes the IP address mapping to the Frontend IP of the load balancer to allow for additional flexibility.

Azure’s managed Kubernetes service, AKS, uses Load Balancers with Floating IP enabled by default, orchestrated automatically in the background when exposing services for ingress. While this automation is helpful, the resulting Network Security Group configuration can leave you slightly confused:

At first glance, this configuration might look straightforward, but once you test the reachability of your load-balanced service from the Internet, you might ask yourself: how is Internet ingress even possible?

The following list breaks the different rules down:

- The ruleset allows ingress traffic from the Internet to a few public IP addresses.

- Those public IP addresses are assigned to the Load Balancer, not the target nodes.

- No other rules allow any traffic from the Internet.

- Azure Load Balancer does not apply SNAT to inbound traffic, i.e. the original public source IP address is preserved and visible to the target node.

- No rule allows any ingress to the private node IPs.
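To make the puzzle concrete, the ruleset can be modelled as a first-match evaluation in priority order (lowest number first), which is how NSG rules are processed. This is a minimal Python sketch under loud assumptions: the contents of `SERVICE_TAGS` are hypothetical (a `10.10.0.0/16` VNet, with the Internet tag simplified to `0.0.0.0/0`), ports and protocols are ignored, and the AKS-created rule's name and priority are made up. The three default inbound rules use their standard names and priorities.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network

# Hypothetical service tag contents for illustration only; Azure maintains
# the real ranges. "Internet" is simplified to 0.0.0.0/0.
SERVICE_TAGS = {
    "VirtualNetwork": [IPv4Network("10.10.0.0/16")],
    "AzureLoadBalancer": [IPv4Network("168.63.129.16/32")],
    "Internet": [IPv4Network("0.0.0.0/0")],
    "*": [IPv4Network("0.0.0.0/0")],
}


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int      # lower number = evaluated first
    source: str        # service tag or CIDR prefix
    destination: str   # service tag or CIDR prefix
    action: str        # "Allow" or "Deny"


def matches(selector: str, ip: IPv4Address) -> bool:
    """True if `ip` falls into the service tag or CIDR named by `selector`."""
    networks = SERVICE_TAGS.get(selector) or [IPv4Network(selector)]
    return any(ip in net for net in networks)


def evaluate(rules: list, src: IPv4Address, dst: IPv4Address) -> Rule:
    """Return the first rule (by priority) matching the packet's addresses."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if matches(rule.source, src) and matches(rule.destination, dst):
            return rule
    raise LookupError("no rule matched")


AKS_STYLE_RULES = [
    # Hypothetical name and priority for the AKS-managed allow rule.
    Rule("allow-internet-to-frontend", 500, "Internet", "42.1.1.1/32", "Allow"),
    Rule("AllowVnetInBound", 65000, "VirtualNetwork", "VirtualNetwork", "Allow"),
    Rule("AllowAzureLoadBalancerInBound", 65001, "AzureLoadBalancer", "*", "Allow"),
    Rule("DenyAllInBound", 65500, "*", "*", "Deny"),
]

# Packet as it would look after destination NAT: 8.8.8.8 -> 10.10.10.42
hit = evaluate(AKS_STYLE_RULES, IPv4Address("8.8.8.8"), IPv4Address("10.10.10.42"))
print(hit.name, hit.action)  # DenyAllInBound Deny
```

If the load balancer applied destination NAT, the packet from 8.8.8.8 to 10.10.10.42 would fall through to `DenyAllInBound`, which is exactly why Internet ingress looks impossible at first glance.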

This naturally raises questions about the Service Tags used by the rules. Service Tags represent ranges of IP addresses that Azure maintains and updates as necessary. You can inspect those ranges, or use them in your own automation, via the REST API or by downloading a JSON file.
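Checking which service tags contain a given address is easy to script against the downloadable JSON file. The Python sketch below assumes the file's top-level `values` list with a `name` and `properties.addressPrefixes` per entry; the sample data is a trimmed, illustrative stand-in (the `ExampleTag` entry is hypothetical), not the real file. The real file mixes IPv4 and IPv6 prefixes, which `ipaddress` handles: an IPv4 address is simply never contained in an IPv6 network.

```python
import json
from ipaddress import ip_address, ip_network


def service_tags_from_json(text: str) -> dict:
    """Map each service tag name to its list of ip_network objects.

    Assumes the layout of Azure's downloadable Service Tags JSON:
    {"values": [{"name": ..., "properties": {"addressPrefixes": [...]}}, ...]}
    """
    data = json.loads(text)
    return {
        entry["name"]: [
            ip_network(prefix, strict=False)
            for prefix in entry["properties"]["addressPrefixes"]
        ]
        for entry in data["values"]
    }


def tags_containing(tags: dict, address: str) -> list:
    """Return the sorted names of all tags whose prefixes contain `address`."""
    ip = ip_address(address)
    return sorted(name for name, nets in tags.items() if any(ip in net for net in nets))


# Trimmed, illustrative sample; ExampleTag is hypothetical.
SAMPLE = """{"values": [
  {"name": "AzureLoadBalancer",
   "properties": {"addressPrefixes": ["168.63.129.16/32"]}},
  {"name": "ExampleTag",
   "properties": {"addressPrefixes": ["203.0.113.0/24", "2001:db8::/32"]}}
]}"""

tags = service_tags_from_json(SAMPLE)
print(tags_containing(tags, "168.63.129.16"))  # ['AzureLoadBalancer']
print(tags_containing(tags, "42.1.1.1"))       # []
```

Against the real file you would pass in its contents instead, e.g. `service_tags_from_json(open(path, encoding="utf-8").read())`, where `path` points at your downloaded copy.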

Two questions about Service Tags come to mind when looking at the NSG above:

- Does the VirtualNetwork service tag potentially include the public frontend IP addresses of the load balancer, as the load balancer is part of the VNet?

- Does the AzureLoadBalancer service tag include the public frontend IP addresses of the load balancer?

If the answer to either question were yes, the NSG would make sense. Unfortunately, AzureLoadBalancer only includes the virtual IP address of the load balancer used for health probes (168.63.129.16), not the frontend IPs. VirtualNetwork includes various IP ranges depending on your configuration, but it does not include the Load Balancer frontend IPs either.

At first, one particular aspect of NSG processing seems to explain the rules above:

Network security groups are processed after Azure translates a public IP address to a private IP address for inbound traffic

If the frontend IP were mapped to the target host in some way, it would be translated to the host IP before NSG evaluation, making it fall under the VirtualNetwork service tag, which the NSG rules allow. However, if we create a configuration with

- Load Balancing rule with floating IP enabled and

- NSG allowing ingress access to only the private IP,

the ingress connection is not possible anymore, ruling out this explanation.

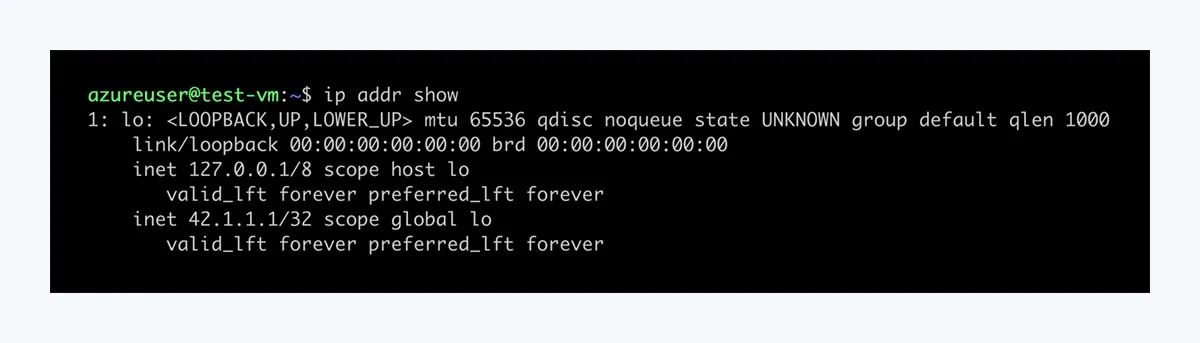

To understand why Internet ingress traffic is possible, we need to look at the implementation of Floating IP. For Floating IP to work, the public Load Balancer frontend IP addresses must be assigned to loopback interfaces on the target hosts:

The guest OS must also be configured to accept network traffic to any IP address on the host, not just the one configured on the network interface that the traffic is received on.
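Whether a host accepts a packet addressed to an IP that is not configured on the receiving interface is the difference between the strong and weak host models. The following Python sketch models that decision for a hypothetical host whose `eth0` holds the private IP and whose loopback holds the frontend IP (on Linux, assigning it could look like `ip addr add 42.1.1.1/32 dev lo`). The interface names and addresses are illustrative assumptions, not taken from an actual AKS node.

```python
from ipaddress import IPv4Address

# Hypothetical host: eth0 holds the private IP, lo holds the loopback
# address plus the Load Balancer frontend IP, as Floating IP requires.
INTERFACES = {
    "eth0": {IPv4Address("10.10.10.42")},
    "lo": {IPv4Address("127.0.0.1"), IPv4Address("42.1.1.1")},
}


def accepts(dst: IPv4Address, ingress_if: str, weak_host: bool) -> bool:
    """Would the host accept a packet addressed to `dst` arriving on `ingress_if`?

    Strong host model: dst must be configured on the receiving interface.
    Weak host model: dst may be configured on any interface of the host.
    """
    if weak_host:
        return any(dst in addrs for addrs in INTERFACES.values())
    return dst in INTERFACES[ingress_if]


frontend = IPv4Address("42.1.1.1")
print(accepts(frontend, "eth0", weak_host=False))  # False
print(accepts(frontend, "eth0", weak_host=True))   # True
```

Only the weak host model delivers the Floating IP packet, which arrives on `eth0` but is addressed to the loopback-assigned frontend IP, to a service listening on that address.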

With this in mind, the Network Security Group above also explains why Internet ingress is possible:

- There is no change of destination address taking place on the load balancer.

- The load balancer forwards every network packet ‘as is’, preserving both the public source and destination IP addresses.

- This means the network packets arriving at the host and processed by the NSG have source IP of 8.8.8.8 and destination IP of 42.1.1.1.

- This matches the NSG rules allowing the ingress traffic.
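The whole argument can be condensed into a short Python sketch comparing what the NSG sees with and without Floating IP. It assumes the addresses from this post, reduces each NSG to a list of destination prefixes that Internet traffic may reach (everything else hits `DenyAllInBound`), and ignores ports and protocols. `AKS_STYLE` and `PRIVATE_ONLY` are hypothetical stand-ins for the AKS-generated NSG and the private-IP-only NSG discussed in this post.

```python
from ipaddress import IPv4Address, IPv4Network

CLIENT = IPv4Address("8.8.8.8")        # public client
FRONTEND = IPv4Address("42.1.1.1")     # Load Balancer frontend IP
BACKEND = IPv4Address("10.10.10.42")   # target host private IP


def delivered(floating_ip: bool) -> tuple:
    """(src, dst) of the packet as the NSG sees it on the target host.

    The source is preserved in both modes; only the destination differs:
    without Floating IP it is rewritten to the backend (destination NAT),
    with Floating IP it stays the frontend IP.
    """
    return (CLIENT, FRONTEND if floating_ip else BACKEND)


def nsg_allows(allowed_destinations: list, dst: IPv4Address) -> bool:
    """Simplified NSG: Internet ingress is allowed only to the listed prefixes."""
    return any(dst in IPv4Network(prefix) for prefix in allowed_destinations)


AKS_STYLE = ["42.1.1.1/32"]         # allows Internet -> frontend IP
PRIVATE_ONLY = ["10.10.10.42/32"]   # allows Internet -> private host IP

for floating in (False, True):
    _, dst = delivered(floating)
    print(f"floating_ip={floating}: dst={dst} "
          f"aks_style={nsg_allows(AKS_STYLE, dst)} "
          f"private_only={nsg_allows(PRIVATE_ONLY, dst)}")
# floating_ip=False: dst=10.10.10.42 aks_style=False private_only=True
# floating_ip=True: dst=42.1.1.1 aks_style=True private_only=False
```

With Floating IP enabled, the AKS-style NSG admits the packet while the private-only NSG blocks it, matching the experiment described earlier.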

Impact & Conclusions

This understanding is essential for an accurate picture of your environment. Otherwise, analysts or network analysis tools may incorrectly classify some target hosts as private or not Internet-exposed, which can affect your vulnerability management triage and patching priorities, potentially leaving externally exposed hosts vulnerable for longer than you would want.


